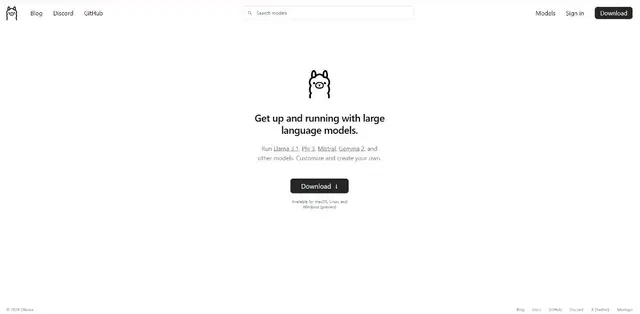

Ollama

What is Ollama?

Ollama is a lightweight tool designed to run large language models locally on your computer. It simplifies the process of deploying and managing AI models like Llama 3.3, Phi 4, Mistral, and Gemma 2 on personal machines.

Top Features:

- Local Model Deployment: run advanced language models directly on your machine without cloud dependencies.

- Cross-Platform Support: works smoothly across macOS, Linux, and Windows operating systems.

- Model Customization: modify and create personalized versions of language models for specific needs.
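As a rough illustration of the customization feature, Ollama describes custom models in a plain-text Modelfile. The sketch below only generates that text; the helper name `render_modelfile`, the base model, the system prompt, and the temperature value are all hypothetical choices for this example, not settings from the review.

```python
def render_modelfile(base_model: str, system_prompt: str, temperature: float = 0.7) -> str:
    """Return Modelfile text that derives a custom model from base_model.

    Uses three Modelfile instructions: FROM (base model), PARAMETER
    (a sampling setting), and SYSTEM (a default system prompt).
    """
    lines = [
        f"FROM {base_model}",
        f"PARAMETER temperature {temperature}",
        f'SYSTEM """{system_prompt}"""',
    ]
    return "\n".join(lines) + "\n"


# Hypothetical values, chosen only for illustration.
modelfile_text = render_modelfile("llama3.3", "You are a concise code reviewer.", 0.2)
print(modelfile_text)
```

Saving the printed text to a file named `Modelfile` and running `ollama create reviewer -f Modelfile` (where `reviewer` is an arbitrary name) should register the customized model locally.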

Pros and Cons

Pros:

- Privacy: all processing happens locally, keeping your data on your machine.

- No Internet Required: use AI models offline once downloaded to your system.

- Simple Interface: straightforward setup process for running complex language models.

Cons:

- Hardware Requirements: larger models need plenty of RAM, and ideally a capable GPU, to run at usable speeds.

- Storage Space: language models can take up significant disk space.

- Limited Features: fewer options compared to cloud-based AI platforms.

Use Cases:

- Development: integrate AI capabilities into local development environments and applications.

- Research: experiment with language models in controlled, offline environments.

- Education: learn about AI models through hands-on experience on personal computers.

Who Can Use Ollama?

- Developers: programmers looking to implement AI features in their applications.

- Researchers: academics and scientists working with language models.

- Tech enthusiasts: individuals interested in exploring AI technology locally.

Pricing:

- Free: open-source software available at no cost.

- No Subscription: download and use without recurring payments.

Our Review Rating Score:

- Functionality and Features: 4/5

- User Experience (UX): 4/5

- Performance and Reliability: 3.5/5

- Scalability and Integration: 3.5/5

- Security and Privacy: 5/5

- Cost-Effectiveness and Pricing Structure: 5/5

- Customer Support and Community: 3.5/5

- Innovation and Future Proofing: 4/5

- Data Management and Portability: 4/5

- Customization and Flexibility: 4/5

- Overall Rating: 4.1/5

Final Verdict:

Ollama brings AI capabilities to your local machine with impressive privacy features and zero cost. While it requires decent hardware, its ability to run advanced language models offline makes it an excellent choice for developers and researchers.

FAQs:

1) How much RAM do I need to run Ollama?

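RAM requirements depend on model size. Ollama's documentation suggests at least 8GB of RAM for 7B-parameter models, 16GB for 13B models, and 32GB for 33B models, so 16GB is a comfortable baseline for most small and mid-sized models.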
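To see why memory needs scale with model size, a back-of-the-envelope estimate can help. The sketch below is an approximation, not an Ollama formula: it counts only the model weights, assumes a given number of bits per weight (4-bit quantization is used here as an assumption), and ignores the context cache and runtime overhead, which add to the real footprint.

```python
def estimate_weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Assumed example sizes; 4 bits per weight is an assumption about quantization.
for size in (7, 13, 33):
    gb = estimate_weight_memory_gb(size, 4)
    print(f"{size}B parameters at 4-bit: about {gb:.1f} GB for weights")
```

The same model stored at 16 bits per weight would need roughly four times as much memory, which is why quantized versions are the practical choice on personal machines.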

2) Can I use Ollama without an internet connection?

Yes, after downloading the models, Ollama works completely offline.

3) What programming languages does Ollama support?

Ollama exposes a local HTTP API, so it can be called from any language that can make HTTP requests, including Python, JavaScript, and Go. Official client libraries are available for Python and JavaScript.
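A minimal sketch of calling Ollama from Python using only the standard library is shown here. It assumes the Ollama server is running on its default local address (`http://localhost:11434`) and that the named model has already been downloaded; the function names and the model and prompt in the usage note are hypothetical.

```python
import json
import urllib.request

# Default local endpoint for one-shot text generation (assumes a default install).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request asking for a single, non-streamed completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request to the local server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]
```

With the server running, `print(generate("llama3.3", "Explain recursion in one sentence."))` should print the model's reply. Setting `stream` to false keeps the example simple by returning one JSON object instead of a stream of partial responses.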

4) Are the models in Ollama regularly updated?

Yes, new model versions are released periodically. To update a model you have already downloaded, run `ollama pull` with the model name from the command line.

5) Can I run multiple models simultaneously?

Yes, you can run multiple models simultaneously, but this will require more system resources.
