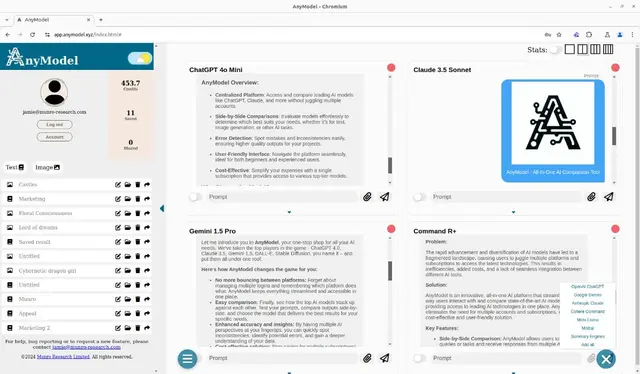

Groq

What is Groq?

Groq is an AI inference platform that runs open-source AI models at high speed. It stands out for low-latency processing and an OpenAI-compatible API that keeps integration simple, so deploying a model takes little setup.

Top Features:

- OpenAI Compatibility: switch from OpenAI to Groq by changing just three lines of code.

- Fast Inference: delivers low-latency responses for popular open-source AI models.

- LLM Support: runs advanced language models like Llama 3.1 with exceptional speed.
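The OpenAI-compatibility point can be illustrated with a minimal sketch. It uses only the Python standard library and builds the request without sending it. It assumes that Groq's OpenAI-compatible base URL is `https://api.groq.com/openai/v1`, that the API key is stored in a `GROQ_API_KEY` environment variable, and that `llama-3.1-8b-instant` is an available model ID. None of these values come from this review, so check Groq's documentation before relying on them. The helper names `build_chat_request` and `send` are hypothetical.

```python
import json
import os
import urllib.request

# Assumed values (not stated in this review): verify against Groq's docs.
GROQ_BASE_URL = "https://api.groq.com/openai/v1"
GROQ_MODEL = "llama-3.1-8b-instant"


def build_chat_request(base_url, api_key, model, prompt):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request):
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# The three values that change when pointing OpenAI-style code at Groq.
# "demo" is a placeholder so the sketch runs without a real key.
request = build_chat_request(
    base_url=GROQ_BASE_URL,                          # 1. endpoint
    api_key=os.environ.get("GROQ_API_KEY", "demo"),  # 2. API key
    model=GROQ_MODEL,                                # 3. model name
    prompt="Say hello in one sentence.",
)
```

With a valid key set, calling `send(request)` would perform the actual network call. The request body and headers keep the same shape as for OpenAI, which is why the migration is described as a three-line change.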

Pros and Cons

Pros:

- Speed: exceptional processing speed validated by independent benchmarks.

- Easy Migration: minimal code changes needed when switching from other AI providers.

- Model Variety: supports multiple open-source AI models for different applications.

Cons:

- Limited History: a relatively new platform, launched in February 2024.

- Model Restrictions: currently focuses only on open-source AI models.

- Market Position: faces tough competition from established AI infrastructure providers.

Use Cases:

- AI Development: building and deploying AI applications with minimal latency.

- Research Projects: running complex AI models for academic and scientific research.

- Enterprise Solutions: implementing AI solutions in business environments.

Who Can Use Groq?

- Developers: professionals looking to integrate AI capabilities into their applications.

- Researchers: academics and scientists working with AI models and data analysis.

- Organizations: companies seeking fast AI inference solutions for their operations.

Pricing:

- Free Trial: information not available at launch.

- Pricing Plan: details pending public release.

Our Review Rating Score:

- Functionality and Features: 4.5/5

- User Experience (UX): 4.0/5

- Performance and Reliability: 4.8/5

- Scalability and Integration: 4.2/5

- Security and Privacy: 4.0/5

- Cost-Effectiveness and Pricing Structure: N/A

- Customer Support and Community: 3.5/5

- Innovation and Future Proofing: 4.5/5

- Data Management and Portability: 4.0/5

- Customization and Flexibility: 4.0/5

- Overall Rating: 4.2/5

Final Verdict:

Groq brings impressive speed to AI inference, making it a strong choice for developers who prioritize performance. While new to the market, its technical capabilities and simple integration process make it worth considering for AI applications.

FAQs:

1) How does Groq compare to other AI inference platforms?

Groq distinguishes itself through high inference speed and simple, OpenAI-compatible integration, particularly for open-source models.

2) What types of AI models can I run on Groq?

Groq currently supports open-source models, with a particular focus on language models such as Llama 3.1.

3) Is technical expertise required to use Groq?

Basic development knowledge is needed, but the platform simplifies integration: existing OpenAI-based code needs only three lines changed.

4) What makes Groq's processing speed different?

Groq uses a specialized chip architecture, the Language Processing Unit (LPU), designed specifically for AI inference, which results in faster processing times.

5) Can I migrate my existing AI applications to Groq?

Yes, Groq provides compatibility with OpenAI's API, making migration straightforward for existing applications.
