Vellum

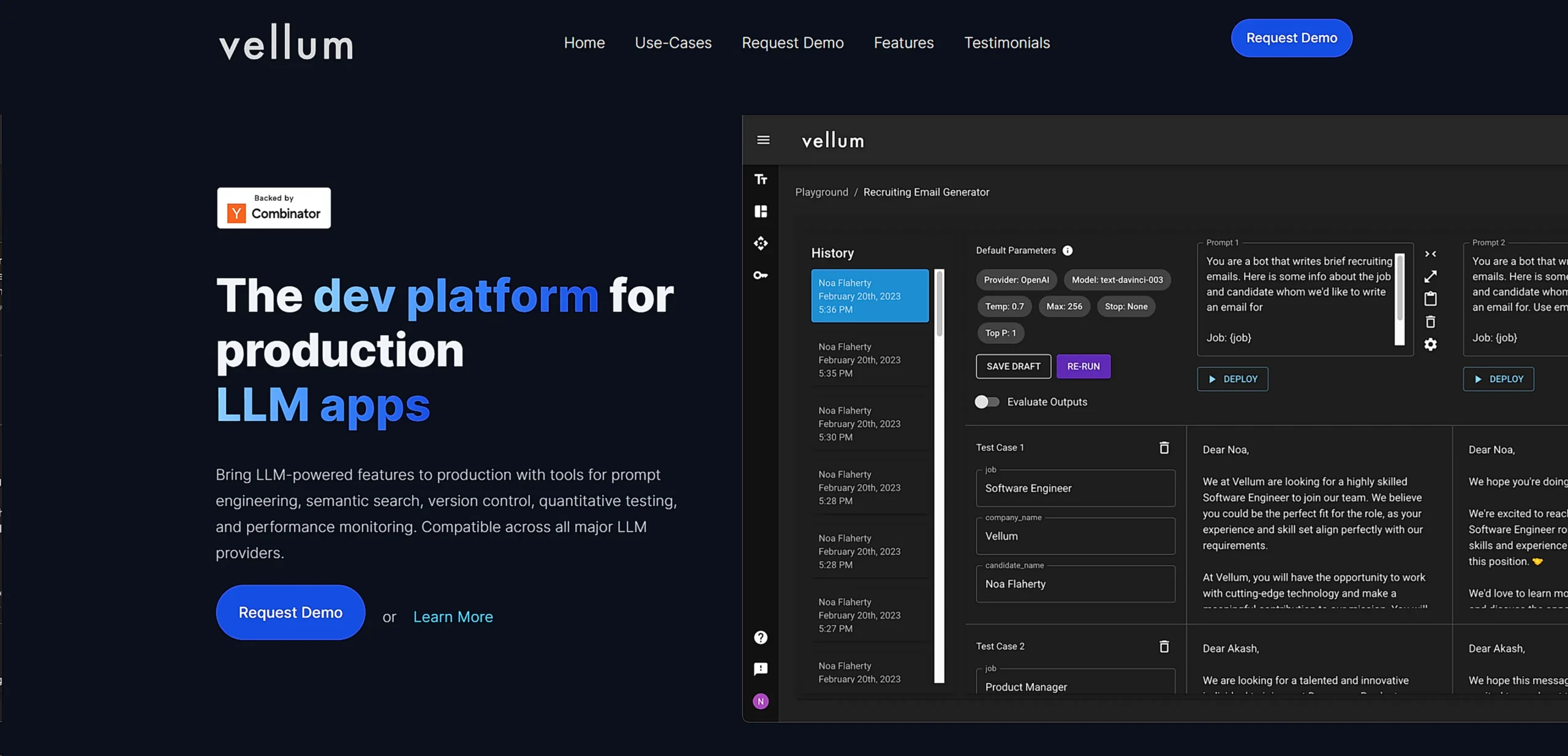

What is Vellum?

Vellum is an AI development platform that streamlines the creation and deployment of LLM-powered features. It combines experimentation tools, version control, testing capabilities, and performance monitoring to help teams build reliable AI systems quickly.

Top Features:

- Rapid Iteration: test different prompts, models, and architectures through an intuitive UI or SDK.

- Version Control: make controlled changes to prompts without modifying the underlying application code.

- Quality Monitoring: track performance metrics and user feedback to optimize AI system output.

- Semantic Search: integrate company-specific context into prompts without managing search infrastructure.

Pros and Cons

Pros:

- Speed: reduces AI development time by 50% through streamlined testing and deployment.

- Accessibility: enables non-technical team members to experiment with AI features independently.

- Support: provides responsive customer service and implementation guidance when needed.

Cons:

- Learning Curve: some advanced workflows take time to master.

- Complex Setup: initial configuration may require technical expertise to get the best results.

- Limited Templates: could benefit from more pre-built templates for common use cases.

Use Cases:

- AI Product Development: build and test LLM-powered features with rapid iteration cycles.

- Quality Assurance: implement automated testing to maintain consistent AI system performance.

- Production Monitoring: track and optimize AI features in live environments.

Who Can Use Vellum?

- Development Teams: engineers working on AI-powered applications and features.

- Product Managers: professionals managing AI product development and deployment.

- Enterprise Organizations: companies scaling AI capabilities across multiple markets.

Pricing:

- Free Trial: available upon request through their website.

- Custom Pricing: based on usage and specific business requirements.

Our Review Ratings:

- Functionality and Features: 4.5/5

- User Experience (UX): 4.2/5

- Performance and Reliability: 4.7/5

- Scalability and Integration: 4.4/5

- Security and Privacy: 4.6/5

- Cost-Effectiveness: 4.3/5

- Customer Support: 4.8/5

- Innovation: 4.5/5

- Data Management: 4.4/5

- Customization: 4.3/5

- Overall Rating: 4.5/5

Final Verdict:

Vellum stands out as a powerful AI development platform that significantly speeds up the creation and deployment of LLM features. While it requires some technical knowledge, its comprehensive toolset and excellent support make it a worthwhile investment.

FAQs:

1) How does Vellum compare to direct API integration?

Vellum adds a management layer that includes version control, testing, and monitoring capabilities not available through direct API integration.

2) What LLM providers does Vellum support?

Vellum works with the major LLM providers, including OpenAI, Anthropic, and Google, among others.

3) Is technical expertise required to use Vellum?

Basic technical knowledge is helpful, but non-technical team members can use many features through the UI.

4) How long does it take to implement Vellum?

Basic implementation takes less than a day, with full integration possible within a week.

5) Does Vellum affect API response times?

Vellum's architecture minimizes latency, with many users reporting improved response times after implementation.
